Understanding Open-Ended Survey Questions in Market Research

Open-ended survey questions let you gather varied responses, in customers' own words, that reveal why a product or service matters to them. Unlike multiple-choice questions, which limit answers to predefined options, open-ended questions can surface broader insights that inform stronger decisions. For instance, asking “What do you like most about our product?” typically yields far richer feedback than a yes/no question or one with preset choices.

Advantages of Open-Ended Responses

Open-ended survey questions offer several advantages for market research:

- Capture the authentic customer voice: With no preset options, you can identify the tone, emotions, and language customers use, helping you better understand your target audience and align products with their needs.

- Surface unanticipated themes: Customers may highlight uses, frustrations, or benefits you hadn’t considered, revealing insights that can shape product or service development.

- Support hypothesis generation: Patterns in responses can spark new ideas or explanations. For example, if many respondents mention difficulty choosing the right size of an item, a company might test whether unclear product details reduce conversions.

- Reveal the “why” behind loyalty: Open responses uncover the reasons customers stay, such as fast support or valuable features, and those reasons can guide better business decisions.

Challenges of Analyzing Open-Text

Analyzing open-text survey data comes with several challenges:

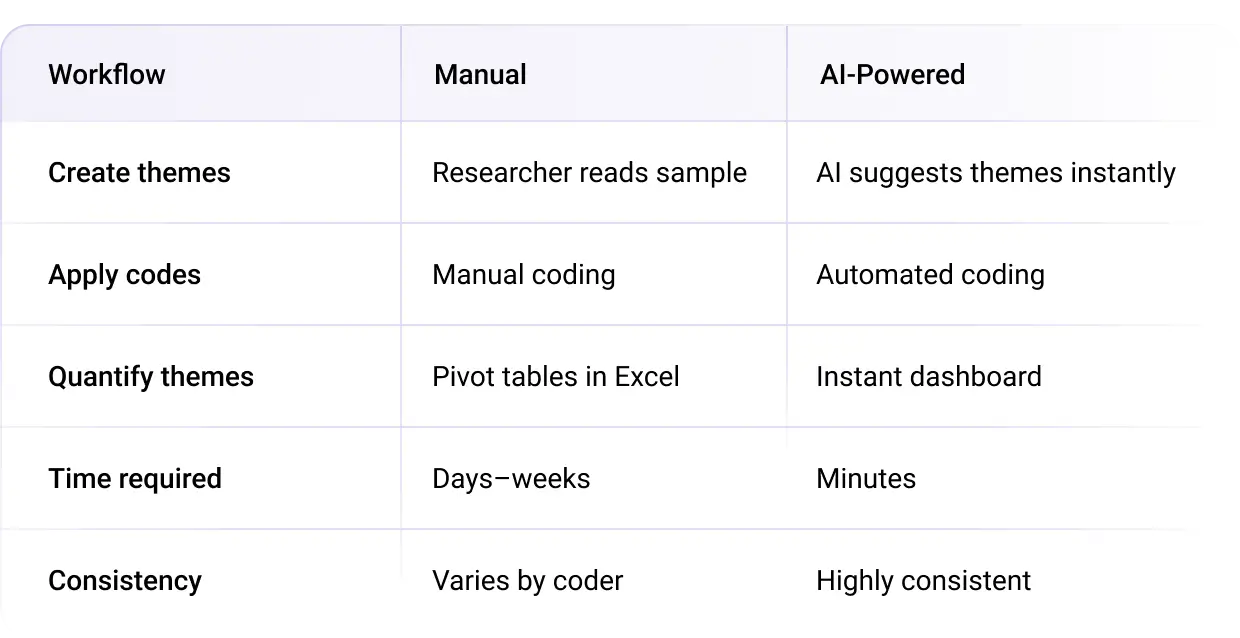

- Time intensity: Manual coding can take days or even weeks to categorize responses, especially with large datasets.

- Risk of inconsistencies: Human coding is subjective, and some AI systems require oversight to maintain accuracy and avoid inconsistent results.

- Language complexity: Customers use varied wording, slang, and tone, which older or less advanced software may struggle to interpret correctly.

Manual Coding

Here are some of the main steps involved in manual coding of open-ended survey responses:

- Data cleaning: Remove duplicates or errors so your dataset is accurate.

- Reading responses: Go through each response to identify underlying patterns or repeated ideas.

- Creating a coding framework: Build a list of categories such as Customer Service or Pricing, and then allocate each response to one of those categories.

- Counting frequencies: Quantify how often each category appears. For instance, if 156 out of 600 responses (26%) mention delivery issues, you can see that delivery is a major concern and compare it against the other categories.
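The cleaning, framework, and counting steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not a production pipeline: the `FRAMEWORK` keyword lists and all function names are hypothetical, and keyword matching stands in for the judgment a human coder applies. Step 2, reading the responses, is the human work that produces the framework in the first place.

```python
from collections import Counter

# Hypothetical coding framework: category -> keywords. In true manual coding
# a person decides by reading; keywords stand in for that judgment here.
FRAMEWORK = {
    "Delivery": ["delivery", "shipping", "arrived"],
    "Pricing": ["price", "expensive", "cost"],
    "Customer Service": ["support", "agent", "service"],
}


def clean(responses):
    """Step 1: drop blank and duplicate responses (ignoring case and spacing)."""
    seen, cleaned = set(), []
    for text in responses:
        key = " ".join(text.lower().split())
        if key and key not in seen:
            seen.add(key)
            cleaned.append(text.strip())
    return cleaned


def assign_category(text):
    """Step 3: allocate a response to the first category whose keyword appears."""
    lowered = text.lower()
    for category, keywords in FRAMEWORK.items():
        if any(word in lowered for word in keywords):
            return category
    return "Other"


def count_frequencies(responses):
    """Step 4: count cleaned responses per category."""
    return Counter(assign_category(text) for text in clean(responses))


def share(count, total):
    """Express a category count as a percentage of all responses."""
    return 100 * count / total


counts = count_frequencies([
    "Delivery was late",
    "delivery was  late",  # duplicate once case and spacing are normalized
    "",                    # blank, removed during cleaning
    "Too expensive for what you get",
    "The support agent was helpful",
])
print(counts)           # one response each in Delivery, Pricing, Customer Service
print(share(156, 600))  # 26.0
```

`share(156, 600)` returns `26.0`, matching the 156-of-600 delivery example. First-match assignment mirrors the one-category-per-response framework described above.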

There are a few different ways to approach manual coding. Some teams rely on researchers to read and code every response by hand. Others experiment with general-purpose AI tools, such as ChatGPT, to speed up parts of the process. A common middle ground is Excel, which adds structure and some automation to the manual process.

Using Excel

With Excel, you can:

- Set up columns: Add Response Text and Category columns to organize data.

- Assign categories: Label each response under the right theme.

- Filter categories: Filter the Category column to review all responses in the same category side by side and check that they were coded consistently.

- Count with a pivot table: Insert a pivot table with Category as the row field and a count of Response Text as the value to see how many responses fall under each theme.

Limitations of Manual Coding at Scale

Manual coding of open-ended survey responses has several limitations that can slow down projects and weaken results.

- Time-consuming: Manually reviewing and coding thousands of responses can take up much of your staff’s time. That time could instead be spent on higher-value activities such as implementing business decisions, developing products, or supporting customers. Reducing the hours spent on manual coding can help your business move forward faster.

- Difficulty updating codes: As you analyze responses, new themes or issues often appear. You might discover a recurring complaint about a mobile app feature midway through coding. Manually, you would need to create a new category, then go back through all of the responses to recheck which ones fit—a process that is both tedious and error-prone.

- Overwhelming workload: If you’re faced with 10,000 or more responses, the sheer volume can quickly lead to mental fatigue and burnout. For companies that collect feedback regularly, manual coding is not sustainable in the long term, because teams struggle to keep up with multiple surveys without making mistakes.

- Lack of accuracy: Human judgment varies, which makes consistency difficult. For example, if customers say “the instructions weren’t clear,” one coder might label it under User Experience while another places it under Customer Support. These differences make it harder to fully trust the final analysis.
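One common way to put a number on this inconsistency, assumed here rather than taken from the text above, is to have two coders label the same responses and compare them. The sketch below computes simple percent agreement and Cohen's kappa, which discounts the agreement you would expect by chance alone. The category labels are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of responses that both coders placed in the same category."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of responses")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Agreement corrected for the agreement expected by chance alone."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: probability both coders independently pick category c.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for the same four responses from two coders.
coder_a = ["User Experience", "User Experience", "Customer Support", "Pricing"]
coder_b = ["User Experience", "Customer Support", "Customer Support", "Pricing"]
print(percent_agreement(coder_a, coder_b))       # 0.75
print(round(cohens_kappa(coder_a, coder_b), 2))  # 0.64
```

Here the coders agree on three of four responses (75%), but once chance agreement is removed, kappa drops to about 0.64.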

AI-Powered Coding for Open-Ended Responses

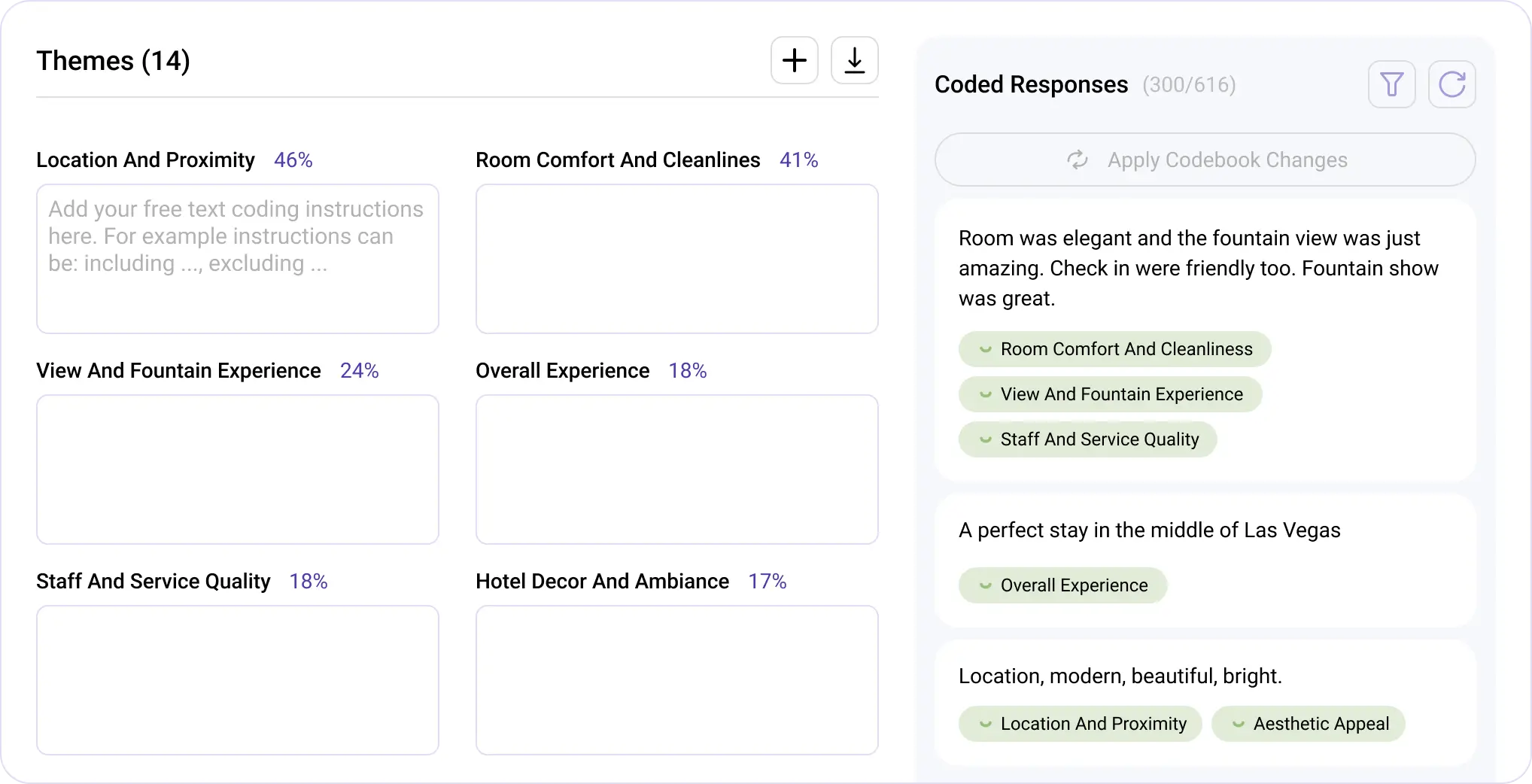

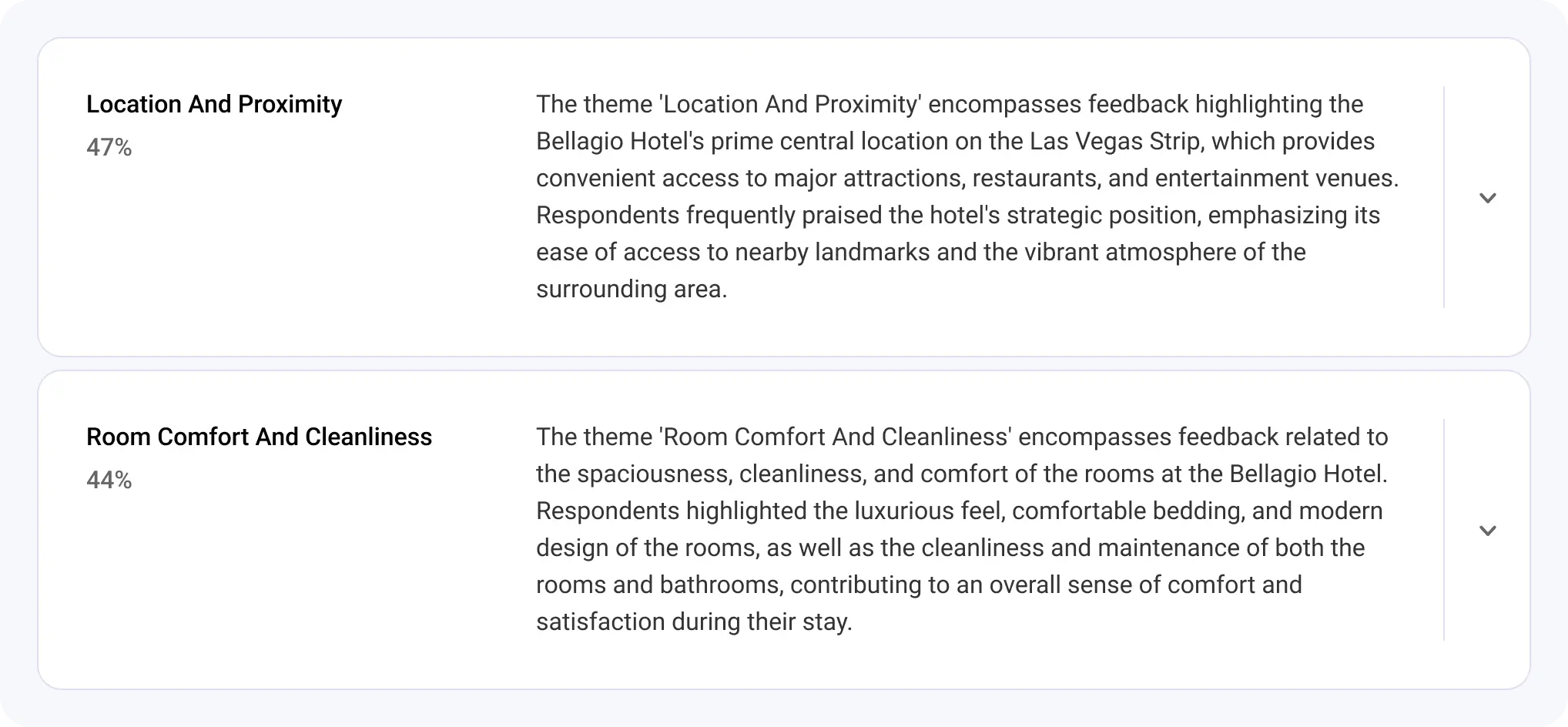

AI-powered coding uses artificial intelligence to automatically read and categorize open-ended survey responses. Instead of manually assigning categories to each answer, the software analyzes language patterns and groups similar responses together.

If 400 customers mention pricing concerns in different ways—such as “too expensive,” “not worth the cost,” or “prices are too high”—the AI can recognize the similarity and group them under a single theme like Pricing Issues.

How AI-Powered Coding Works

AI-powered coding has evolved through different models over the years. Early natural language processing (NLP) approaches relied heavily on keyword matching and simple text rules. While this worked for very basic tasks, it often missed the nuances in customer responses. For example, if one customer wrote “shipping was delayed” and another wrote “my package didn’t arrive on time,” a keyword-based system might treat those as unrelated simply because the exact words don’t match.
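A short sketch shows why keyword rules miss this. The rule below is hypothetical: it files any response containing “shipping”, “delivery”, or “delayed” under Delivery. It catches the first complaint but not the second, and the two sentences turn out to share no words at all.

```python
import re

# Hypothetical keyword rule of the kind early rule-based systems used.
KEYWORD_RULES = {"Delivery": {"shipping", "delivery", "delayed"}}


def tokens(text):
    """Lowercase word set; apostrophes are kept so "didn't" stays one token."""
    return set(re.findall(r"[a-z']+", text.lower()))


def keyword_code(text):
    """Return the first theme sharing a keyword with the response."""
    words = tokens(text)
    for theme, keywords in KEYWORD_RULES.items():
        if words & keywords:
            return theme
    return "Uncategorized"


def word_overlap(a, b):
    """Jaccard overlap of two responses' word sets (0.0 means no shared words)."""
    wa, wb = tokens(a), tokens(b)
    return len(wa & wb) / len(wa | wb)


first = "shipping was delayed"
second = "my package didn't arrive on time"
print(keyword_code(first))          # Delivery
print(keyword_code(second))         # Uncategorized
print(word_overlap(first, second))  # 0.0
```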

Traditional machine learning models offered another approach: they could be trained to spot patterns and classify responses into themes. However, they require large amounts of pre-labeled data and ongoing adjustments to stay accurate. Because they can only assign the themes they were trained on, their outputs tend to be rigid, and they struggle to adapt when unexpected themes appear in the data.
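To illustrate the rigidity, here is a minimal multinomial naive Bayes classifier, chosen as a representative traditional model; the text does not name a specific algorithm. The training examples are hypothetical. Because the model only knows the labels it was trained on, a complaint about an app crash still gets forced into one of the existing themes.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesCoder:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        """Return the most probable trained label; it can never return a new one."""
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(doc_count / total)
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical pre-labeled training data.
texts = [
    "too expensive", "prices are too high", "not worth the cost",
    "delivery was late", "shipping took too long", "package arrived damaged",
]
labels = ["Pricing"] * 3 + ["Delivery"] * 3
model = NaiveBayesCoder().fit(texts, labels)
print(model.predict("the cost is too high"))    # Pricing
print(model.predict("the app keeps crashing"))  # Pricing: forced into an old theme
```

Adding an "App Issues" theme would mean labeling new examples and retraining, which is the ongoing adjustment the paragraph above describes.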

Today, large language models (LLMs), such as those built by OpenAI, Anthropic (Claude), and Google, power software solutions such as Blix, which takes a far more advanced approach to analyzing open responses by focusing on semantics rather than keywords. These systems interpret the actual meaning behind responses in a way much closer to how humans understand language.

For instance, if one person says “the website was easy to navigate” and another writes “the online interface was user-friendly,” an LLM recognizes that both express the same positive sentiment about ease of use. It can also handle spelling mistakes, emojis, and responses in different languages, because it works from the underlying meaning rather than the exact wording. This meaning-based coding allows for more accurate and flexible categorization, and entire datasets can now be processed in minutes, turning thousands of open-text responses into quantifiable insights.
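LLM-based coding is usually driven by a prompt rather than by keyword lists. The sketch below assumes a hypothetical `complete` function that sends a prompt to whichever LLM you use and returns its text reply; it is not Blix's method or any vendor's API. The code builds the prompt, parses a JSON reply, and maps any theme outside the allowed list to `"Other"`.

```python
import json
from typing import Callable, List


def build_prompt(themes: List[str], responses: List[str]) -> str:
    """Ask the model to code each numbered response by meaning, as JSON."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(responses, 1))
    return (
        "Assign each customer response below to exactly one of these themes: "
        + ", ".join(themes)
        + ". Judge by meaning rather than exact wording; responses may contain "
        "typos, emojis, or other languages.\n"
        'Reply with JSON only, mapping response numbers to themes, e.g. {"1": "Pricing"}.\n\n'
        + numbered
    )


def code_with_llm(
    themes: List[str], responses: List[str], complete: Callable[[str], str]
) -> List[str]:
    """`complete` is a hypothetical function: prompt in, model reply text out."""
    reply = json.loads(complete(build_prompt(themes, responses)))
    coded = []
    for i in range(1, len(responses) + 1):
        theme = reply.get(str(i), "Other")
        # Guard against a model inventing a theme outside the allowed list.
        coded.append(theme if theme in themes else "Other")
    return coded


def fake_complete(prompt: str) -> str:
    # Stand-in for a real LLM call, returning a canned reply.
    return '{"1": "Ease of Use", "2": "Ease of Use", "3": "Shipping"}'


responses = [
    "the website was easy to navigate",
    "the online interface was user-friendly",
    "delivery took too long",
]
print(code_with_llm(["Ease of Use", "Delivery"], responses, fake_complete))
# ['Ease of Use', 'Ease of Use', 'Other']
```

Passing the model call in as a parameter keeps the sketch vendor-neutral and lets you test the parsing logic without network access.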

Advantages for Market Researchers

AI-powered coding offers several advantages that save time, improve accuracy, and make survey analysis more flexible.

- Speed and scalability: With an AI-powered tool, coding that would occupy an entire team for days can often be completed in minutes. Instead of having multiple staff manually code thousands of responses, one person can upload the data and let the software handle the categorization, freeing up hours of valuable time.

- Consistency across the dataset: AI applies the same criteria to every response, so similar comments are grouped the same way. Unlike human coders, who may interpret responses differently, AI keeps the results consistent across the whole dataset.

- Sentiment analysis: AI can detect whether each response is positive, negative, or neutral, so important tones and emotions aren’t overlooked. For example, two customers might both mention delivery: one says “delivery was fast and reliable” (positive), while another says “delivery took too long” (negative). Coding both only under Delivery would hide the fact that one is praise and the other a complaint.

- Revising themes: Even after coding is complete, AI-powered analysis tools let you remove, split, or merge categories. This flexibility makes it easy to refine themes as new insights emerge.

- Exporting results: Once the analysis is done, you can export findings to Excel for deeper exploration or to PowerPoint for polished charts and ready-to-use presentation slides.
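The delivery sentiment example can be sketched by counting (theme, sentiment) pairs instead of themes alone. The word lists below form a toy, hypothetical lexicon; an AI tool infers sentiment from meaning rather than from a fixed list, but the tallying step is the same.

```python
import re
from collections import Counter

# Toy, hypothetical lexicon. An AI tool infers sentiment from meaning instead.
POSITIVE = ["fast", "reliable", "quick", "friendly"]
NEGATIVE = ["too long", "late", "slow", "damaged"]


def mentions(text, phrase):
    """True if the phrase appears as whole words (so "late" won't match "plate")."""
    return re.search(rf"\b{re.escape(phrase)}\b", text.lower()) is not None


def sentiment(text):
    pos = sum(mentions(text, p) for p in POSITIVE)
    neg = sum(mentions(text, p) for p in NEGATIVE)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "neutral"


def tally(coded):
    """coded: (response, theme) pairs. Count (theme, sentiment) combinations."""
    return Counter((theme, sentiment(text)) for text, theme in coded)


coded = [
    ("delivery was fast and reliable", "Delivery"),
    ("delivery took too long", "Delivery"),
]
print(Counter(theme for _, theme in coded))  # Delivery: 2, sentiment hidden
print(tally(coded))  # one positive and one negative Delivery mention
```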

How AI Makes Quantitative Analysis Possible

With open-ended responses, the language is often unstructured because every customer phrases comments differently. AI can scan through this text, identify common ideas, and organize them into categories, turning scattered comments into structured information.

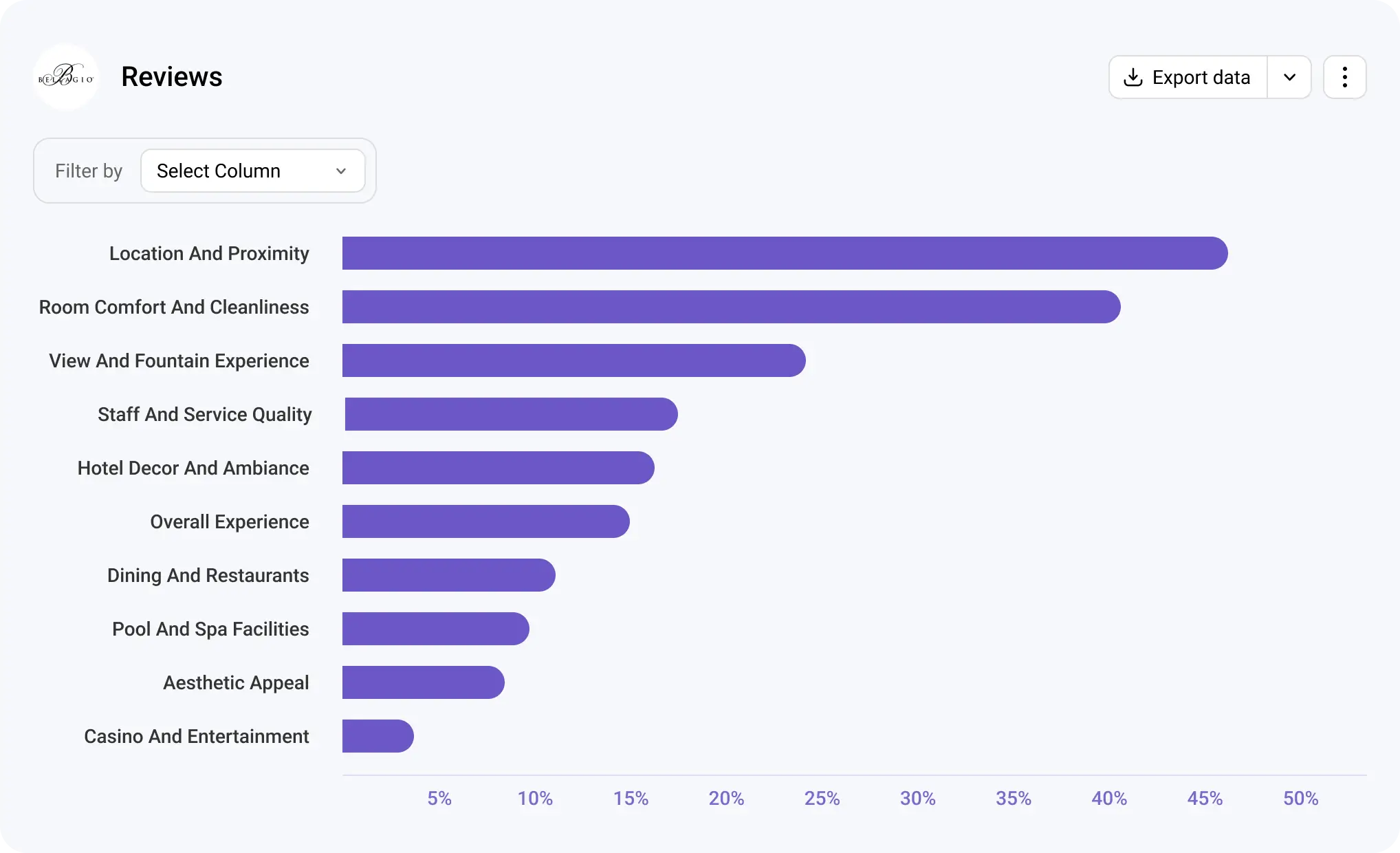

Once responses are grouped, AI-powered tools can turn the text into numbers. For example, if hundreds of guests mention a hotel’s cleanliness or service quality in reviews, the system can calculate what percentage of reviewers raised each theme. You might find that 45% of reviewers brought up cleanliness, a clear data point you can compare against other metrics, such as customer satisfaction or repeat bookings.
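Computing these percentages is straightforward once each response carries its themes. In the sketch below, each reviewer is represented by a hypothetical set of coded themes; because one review can mention several themes, the shares can add up to more than 100%.

```python
from collections import Counter


def theme_shares(coded_reviews):
    """Percentage of reviewers who mentioned each theme.

    coded_reviews holds one set of themes per reviewer. A reviewer can raise
    several themes, so the percentages can add up to more than 100.
    """
    total = len(coded_reviews)
    counts = Counter(theme for themes in coded_reviews for theme in set(themes))
    return {theme: 100 * n / total for theme, n in counts.items()}


# Hypothetical coded hotel reviews, one set of themes per reviewer.
reviews = [
    {"Cleanliness", "Service Quality"},
    {"Cleanliness"},
    {"Room Comfort"},
    {"Service Quality"},
]
print(theme_shares(reviews))
# Cleanliness 50.0, Service Quality 50.0, Room Comfort 25.0 (key order may vary)
```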

This approach also makes it possible to track changes over time. For instance, if the hotel launches a new guest experience program, you might see positive mentions of service quality rise from 20% in one quarter to 40% in the next.
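Tracking a theme over time is the same calculation repeated per period. The quarterly data in this sketch is hypothetical; a rise from 20% to 40% is an increase of 20 percentage points, which doubles the share.

```python
def share_by_period(coded_by_period, theme):
    """Percentage of responses in each period that were coded with `theme`."""
    return {
        period: 100 * sum(theme in themes for themes in responses) / len(responses)
        for period, responses in coded_by_period.items()
    }


def change_in_points(shares, start, end):
    """Change between two periods, in percentage points."""
    return shares[end] - shares[start]


# Hypothetical quarterly data: each response is a set of coded themes.
coded_by_period = {
    "Q1": [{"Positive Service"}] * 2 + [{"Cleanliness"}] * 8,
    "Q2": [{"Positive Service"}] * 4 + [{"Cleanliness"}] * 6,
}
shares = share_by_period(coded_by_period, "Positive Service")
print(shares)                                # {'Q1': 20.0, 'Q2': 40.0}
print(change_in_points(shares, "Q1", "Q2"))  # 20.0
```

Reporting the change in percentage points rather than as a relative percentage avoids the ambiguity of saying a 20% share "grew by 100%".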

AI also makes it easy to present results visually. Structured data can be turned into:

- Bar charts for comparing top issues

- Heat maps or dashboards for identifying trends at a glance

These visualizations make complex feedback simple to understand and share with decision-makers.
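The core of a bar chart for comparing top issues can be sketched in plain text: themes sorted by share, with bar length proportional to the percentage. The `text_bar_chart` function and its inputs are hypothetical; in practice you would use a charting tool or export the results to Excel or PowerPoint.

```python
def text_bar_chart(shares, width=40):
    """Render theme percentages as horizontal text bars, largest first."""
    label_width = max(len(theme) for theme in shares)
    lines = []
    for theme, pct in sorted(shares.items(), key=lambda item: item[1], reverse=True):
        bar = "#" * round(pct / 100 * width)  # bar length proportional to share
        lines.append(f"{theme:<{label_width}} | {bar} {pct:.0f}%")
    return "\n".join(lines)


# Hypothetical theme shares, in percent of reviewers.
print(text_bar_chart({"Room Comfort": 25.0, "Cleanliness": 50.0}, width=20))
# Cleanliness  | ########## 50%
# Room Comfort | ##### 25%
```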

The bar chart below has been generated using Blix. It shows how guest comments about the Bellagio Hotel were categorized and quantified. Each bar represents a theme pulled from hotel reviews—such as service quality or room comfort—while the percentages along the bottom indicate how often each theme appeared. Blix’s AI-powered tool turns open-ended feedback into measurable data.